USA – Nvidia CEO Jensen Huang recently revealed groundbreaking advancements, including next-generation chips, innovative large language models, a mini artificial intelligence (AI) supercomputer, and a strategic partnership with Toyota.

These announcements underscore the aggressive expansion of Nvidia, the world’s second-most valuable company, across the tech industry.

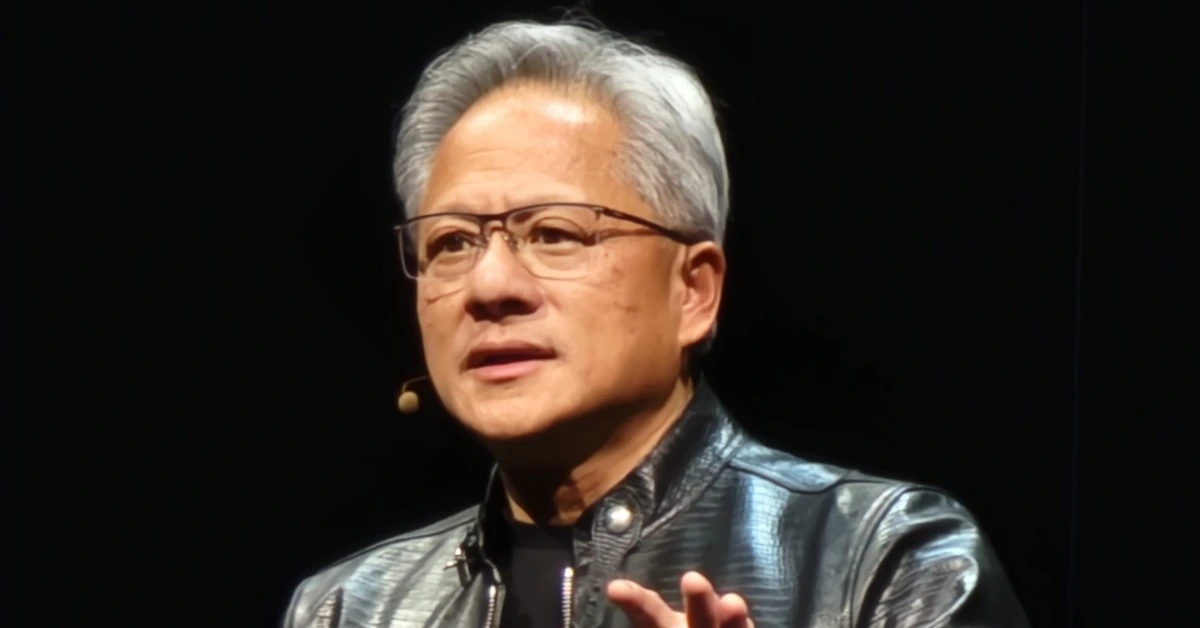

Speaking at the CES trade show in Las Vegas, Huang expressed pride in the company’s progress, calling it an “extraordinary journey, extraordinary year.”

Dressed in a shiny version of his signature leather jacket, he addressed a packed audience just hours after Nvidia’s stock reached a record high of US$149.43.

The surge in chip stocks was fueled by strong AI demand, signaled by Foxconn’s record fourth-quarter revenue.

Huang affirmed the robust demand for AI technology, stating that Nvidia’s latest hardware architecture, Blackwell, is now in full production.

Although technical issues delayed its initial rollout, the new architecture now powers Nvidia’s most advanced gaming chips, the GeForce RTX 50 Series.

These chips, designed for gamers, creators, and developers, integrate AI-driven neural rendering and ray tracing to enhance graphics performance.

Nvidia says they also run generative AI models up to twice as fast as the previous generation while using less memory.

Nvidia claims that Blackwell chips can process trillion-parameter large language models with up to 25 times lower cost and energy consumption compared to their predecessors.
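To put the "up to 25 times" figure in perspective, the arithmetic can be sketched as follows. This is only an illustration: the function name and the baseline cost are hypothetical, and because the claim is an upper bound, the result is the best case rather than a typical saving.

```python
def best_case_cost(previous_cost: float, max_reduction: float = 25.0) -> float:
    """Lowest cost implied by an 'up to N times lower' claim.

    previous_cost is a hypothetical baseline on the older hardware;
    max_reduction is the claimed upper-bound factor (25 in the article).
    """
    if max_reduction <= 0:
        raise ValueError("max_reduction must be positive")
    return previous_cost / max_reduction


# Hypothetical example: a workload that cost 1,000 units on the predecessor
# would cost no less than 40 units if the full 25x reduction applied.
print(best_case_cost(1000.0))
```

Real savings would depend on the model, the workload, and how each system is configured, none of which the announcement specifies.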

This capability positions Blackwell to drive a new demand cycle, outperforming previous architectures like Hopper and Ampere, and competing with products from AMD, Intel, and Google.

Mini AI supercomputer

Another highlight of the event was the unveiling of Project DIGITS, a mini AI supercomputer powered by the new Nvidia GB10 Grace Blackwell Superchip.

This superchip delivers up to a petaflop of AI performance, meaning it can carry out as many as a quadrillion calculations per second.
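For a sense of scale, a petaflop is 10^15 operations per second. The short sketch below converts that rate into an idealized running time for a given amount of work. The function name and the workload size are hypothetical, and it assumes the peak rate is sustained throughout, which real workloads rarely achieve.

```python
PETA = 10**15  # one quadrillion


def ideal_seconds(total_operations: float, petaflops: float = 1.0) -> float:
    """Idealized time to finish total_operations at a sustained petaflops rate."""
    if petaflops <= 0:
        raise ValueError("petaflops must be positive")
    return total_operations / (petaflops * PETA)


# Hypothetical workload of 3.6 quintillion operations:
# at one petaflop this takes 3,600 seconds, or one hour, in the ideal case.
print(ideal_seconds(3.6e18))
```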

It is designed for prototyping, fine-tuning, and running large AI models. Users can perform inference—applying new data to a trained AI model—on their desktops before deployment.

The superchip connects an Nvidia Blackwell GPU to an Nvidia Grace CPU via NVLink-C2C, enabling high-speed communication between chips.

The rise of agentic AI

Huang highlighted the increasing demand for computing power driven by the rise of agentic AI, where AI systems perform automated tasks by interacting with other bots.

He explained that AI workloads are expanding as AI systems begin “thinking, reflecting, and processing internally.”

To support these advancements, Nvidia introduced a new family of language models called Nvidia Llama Nemotron.

Based on Meta’s open-source Llama model and fine-tuned for enterprise use, Nemotron is designed for agentic AI applications.

It enables developers to create AI agents for tasks such as customer service and fraud detection.

The Nemotron models come in three versions: Nano (cost-effective), Super, and Ultra (offering the highest accuracy and performance).

Cosmos: World foundation models

In robotics, Nvidia unveiled Cosmos, a platform for creating generative world foundation models.

These AI systems simulate real or virtual environments, enabling developers to generate synthetic data for training robots and autonomous vehicles.

Using text, image, or video prompts, developers can create virtual worlds. Cosmos, available under an open license, was trained on 20 million hours of video to understand the physical world. It can generate captions for videos, aiding the training of multimodal AI models.

Lastly, Huang announced a partnership with Toyota, the world’s largest automaker. Toyota will build its next-generation vehicles on Nvidia’s Drive AGX Orin, a hardware and software platform that supports advanced driver assistance systems and autonomous driving.
